183

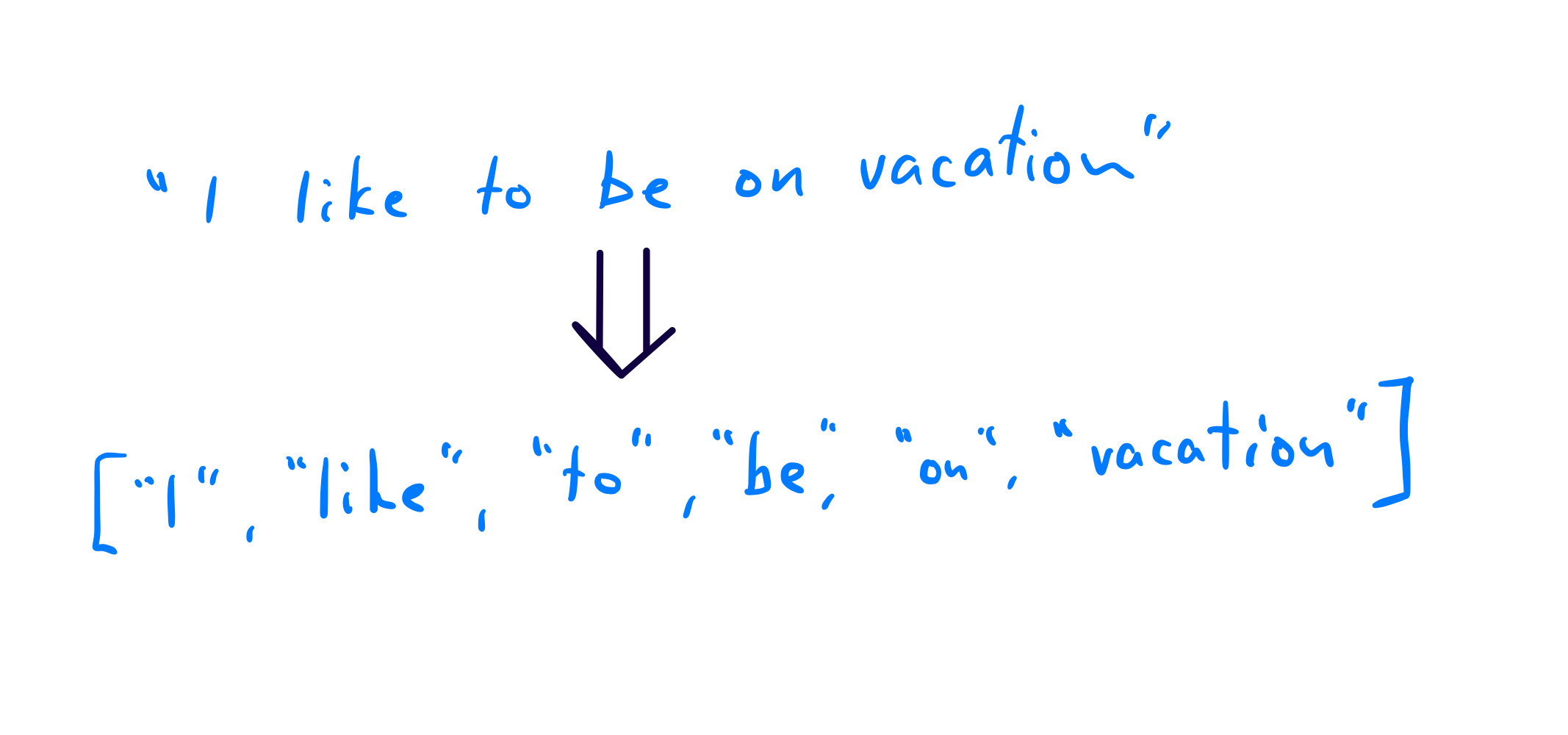

['THE', 'SISTERS', 'There', 'was', 'no', 'hope', 'for', 'him', 'this', 'time', 'it', 'was', 'the', 'third', 'stroke', 'Night', 'after', 'night', 'I', 'had', 'passed', 'the', 'house', 'it', 'was', 'vacation', 'time', 'and', 'studied', 'the', 'lighted', 'square', 'of', 'window', 'and', 'night', 'after', 'night', 'I', 'had', 'found', 'it', 'lighted', 'in', 'the', 'same', 'way', 'faintly', 'and', 'evenly', 'If', 'he', 'was', 'dead', 'I', 'thought', 'I', 'would', 'see', 'the', 'reflection', 'of', 'candles', 'on', 'the', 'darkened', 'blind', 'for', 'I', 'knew', 'that', 'two', 'candles', 'must', 'be', 'set', 'at', 'the', 'head', 'of', 'a', 'corpse', 'He', 'had', 'often', 'said', 'to', 'me', '“I', 'am', 'not', 'long', 'for', 'this', 'world”', 'and', 'I', 'had', 'thought', 'his', 'words', 'idle', 'Now', 'I', 'knew', 'they', 'were', 'true', 'Every', 'night', 'as', 'I', 'gazed', 'up', 'at', 'the', 'window', 'I', 'said', 'softly', 'to', 'myself', 'the', 'word', 'paralysis', 'It', 'had', 'always', 'sounded', 'strangely', 'in', 'my', 'ears', 'like', 'the', 'word', 'gnomon', 'in', 'the', 'Euclid', 'and', 'the', 'word', 'simony', 'in', 'the', 'Catechism', 'But', 'now', 'it', 'sounded', 'to', 'me', 'like', 'the', 'name', 'of', 'some', 'maleficent', 'and', 'sinful', 'being', 'It', 'filled', 'me', 'with', 'fear', 'and', 'yet', 'I', 'longed', 'to', 'be', 'nearer', 'to', 'it', 'and', 'to', 'look', 'upon', 'its', 'deadly', 'work']